The amount of data we collect is exponentially increasing. Unfortunately, not all of this data is complete. In a world of fill-out forms and manual data collection, incomplete observations are unavoidable. Database developers are painfully aware of this problem. In practice, the most common solution for this particular problem is to mark those missing values as NULL.

What is a NULL value?

A NULL value is a special marker used in SQL to indicate that a data value does not exist in the database. In other words, it is just a placeholder to denote values that are missing or that we do not know. NULL can be confusing and cumbersome at first. But it is imperative for any analyst to know how to handle them. For this reason, I have decided to write some tips and tricks you should know about NULL values.

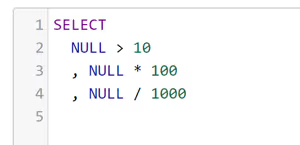

Comparisons and arithmetic operations with a NULL, produce NULL results.

Is NULL bigger than 10? What is 100 times NULL? Can you divide NULL by 1000? The answer to all of these questions is NULL!

If one of your values is NULL, the result will also be NULL… regardless of the other values. Think about it this way: If you don’t know one of the values, you cannot know the answer either.

LEN(0) will catch both NULL and "".

In practice, some of the most common ways to encode unknown text values is to use NULL or as empty strings. In some cases, you might even find data sources that use a mix of both. This is problematic if you want to count how many NULL values are there in your database. Commands like IS NULL will ignore the empty spaces. Luckily, LEN(0) is here to help.

"NULL", "NA", "" might be cast into strings.

Unfortunately, it is very common that when you export data from one platform to the other, NULL values and other special markers (e.g. NaN, NA, Inf) might be cast into strings and treated like any other text field. This is particularly problematic because certain commands like IS NULL or LEN(0) won't work anymore, meaning you should always be skeptical and inspect your data.

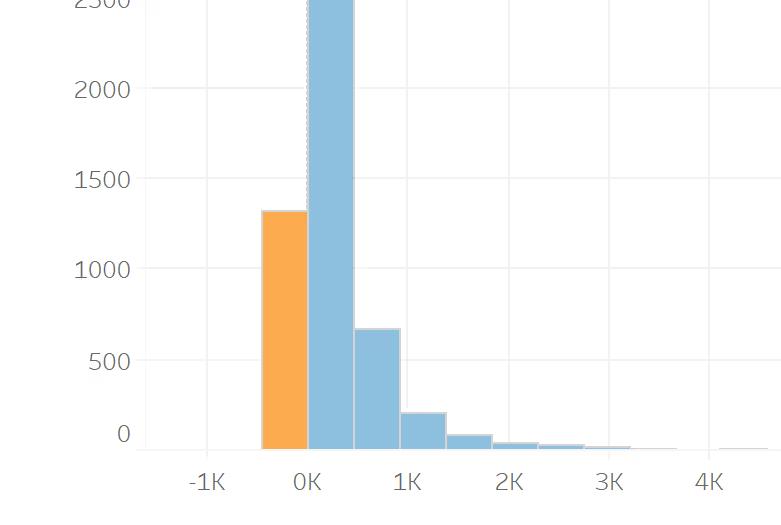

Always check your data with a histogram.

Some people have a nasty tendency to impute the mean or an arbitrary value (e.g. 99, 100, 0, -1) directly into the database. If a value appears suspiciously often or seems impossible; it might be an attempt to input NULL values.

Just to name a couple of examples: I once saw a database where all of the customers who made a purchase before 2005 had an age of 27. In another dataset, I saw time spans of -1 second.

NULL is not always random.

Removing all of the rows containing a NULL value might not be a wise decision. Especially if there is a systematic reason for which they are NULL. In statistics, there is a distinction between data:

- Missing completely at random (MCAR).

- Missing at random (MAR).

- Missing not at random (MNAR).

The implications of believing that your NULL values are missing completely at random can catastrophic for the validity of your analysis. Just to illustrate this, I once saw a dataset where everybody under 18 had a salary NULL. Ignoring those rows would massively increase the mean age of the whole dataset.

Also noteworthy: In some cases, especially when data is missing not a random, a boolean column indicating if something is NULL might be a good feature for a statistical model.

COUNT(*) vs COUNT(1)

Sometimes you might care about the number of rows and sometimes you might care about the number of rows that are not NULL. For those cases, you can add COUNT(*) and COUNT(1) respectively to your SQL queries. In Tableau, COUNT([Column]) and SUM([Number of Records]) will do the same trick.

Missing rows are not NULL

This might sound obvious to some and confusing to others, but it is imperative to remember that missing rows (i.e. rows that are not present in the data) behave differently than a missing value (i.e. NULL).

Imagine you have a shop that never opens on Sundays. When you analyze your daily revenue, there won’t be transaction data for any Sunday (i.e. rows that are not present in the data). By the end of a year, you will have data for roughly 313 days (instead of 365 days).

If you were analyzing data for this shop, there are two things you should know:

- The distinct count of days in your fact table (i.e. table that registers the quantitative information regarding the events that occur in your business) will be equal to the number of days with purchases. NOT the amount of days that have passed since your business opened.

- Using commands like IFNULL or ISNULL won’t have any effect on missing rows (unless you start from a business calendar and left join your fact data in). Those only affect existing rows with NULL values.

The only way to circumvent these limitations is to left join your fact table with a table containing all of the possible dates. That way you will have a row even for the days without transactions.

Thanks for reading this far, I hope this tip was helpful. If you have any questions, thoughts, or remarks about NULL values, feel free to contact me or any of our colleagues. We are always happy to discuss your use cases.

.avif)