Looking to build manual pipelines? Here's why you shouldn't...

Nope. You read that title correctly.

This blog explains why you shouldn't always build your own custom data pipelines. Designing a robust, reliable data pipeline that can scale with demand is not simple: it's time-consuming and costly.

The good news is: there are other ways.

Let's dive in!

What is a data pipeline?

A data pipeline is a series of processing steps with three main components: extracting the data from the source system, transforming it, and loading it into the destination system. When the steps run in this order, the process is commonly referred to as ETL (Extract, Transform, Load): the data is transformed whilst it is in transit, before it has been loaded into the destination.
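To make the three steps concrete, here is a minimal ETL sketch in Python. It is purely illustrative: a list of dictionaries stands in for the source system, an in-memory SQLite database stands in for the warehouse, and the `customers` table and email clean-up rule are hypothetical examples, not part of any particular tool.

```python
import sqlite3


def extract(source_rows):
    # Hypothetical source: a list of dicts standing in for an application/OLTP table.
    return list(source_rows)


def transform(rows):
    # Transform in transit: drop rows without an id and normalise email addresses.
    return [
        {"id": r["id"], "email": r["email"].strip().lower()}
        for r in rows
        if r.get("id") is not None
    ]


def load(conn, rows):
    # Load the already-transformed rows into the destination table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, email TEXT)"
    )
    conn.executemany("INSERT INTO customers (id, email) VALUES (:id, :email)", rows)
    conn.commit()


def run_etl(source_rows, conn):
    load(conn, transform(extract(source_rows)))


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # stand-in for the destination warehouse
    source = [
        {"id": 1, "email": "  Alice@Example.com "},
        {"id": None, "email": "orphan@example.com"},
        {"id": 2, "email": "BOB@example.com"},
    ]
    run_etl(source, warehouse)
    print(warehouse.execute("SELECT id, email FROM customers ORDER BY id").fetchall())
    # → [(1, 'alice@example.com'), (2, 'bob@example.com')]
```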

Another option is to transform the data after it has been loaded into the destination, commonly referred to as ELT (Extract, Load, Transform). We'll explore the pros and cons of both methods in another blog.
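For comparison, here is a similar hypothetical example reworked as ELT, under the same assumptions (in-memory SQLite as the warehouse, made-up `raw_customers` and `customers` tables). The raw rows are loaded unchanged, and the clean-up happens afterwards in SQL inside the destination.

```python
import sqlite3


def extract_and_load(conn, source_rows):
    # E + L: copy the raw rows into a staging table exactly as they arrive.
    conn.execute("CREATE TABLE IF NOT EXISTS raw_customers (id INTEGER, email TEXT)")
    conn.executemany(
        "INSERT INTO raw_customers (id, email) VALUES (:id, :email)", source_rows
    )
    conn.commit()


def transform_in_warehouse(conn):
    # T: rebuild the cleaned table inside the warehouse using its own SQL engine.
    conn.executescript(
        """
        DROP TABLE IF EXISTS customers;
        CREATE TABLE customers AS
        SELECT id, LOWER(TRIM(email)) AS email
        FROM raw_customers
        WHERE id IS NOT NULL;
        """
    )


warehouse = sqlite3.connect(":memory:")  # stand-in for the destination warehouse
extract_and_load(
    warehouse,
    [
        {"id": 1, "email": "  Alice@Example.com "},
        {"id": None, "email": "orphan@example.com"},
    ],
)
transform_in_warehouse(warehouse)
```

Keeping the raw table means you can rerun or change the transformation later without extracting the data again, which is one of the usual arguments for ELT.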

Typically, data pipelines are set up to move data from business applications or OLTP databases into a data warehouse for analytics. Once the data from your various applications is in your data warehouse, it becomes a lot more valuable.

By joining your different data sources together and creating KPIs, metrics and dashboards, you can make better data-led decisions, helping your business grow and uncovering new business opportunities.

By reducing time to insights with robust data pipelines and analytics processes, you can quickly adapt to market changes and trends, making you more relevant, competitive and profitable.

How can you create a data pipeline?

When you start looking at how to build out your data pipelines, you'll find a huge number of options on the market.

Which do you go for?

- Open-source vs managed?

- Code vs low/no code?

- On-premise vs Cloud?

Building your own data pipeline might sound like the best idea at first: you get full control over what data you bring in and what you do with it in transit, you can customise it however you want, and you choose the infrastructure it runs and scales on.

It might even save you money over a managed solution in the long run. However, there are several reasons why you shouldn't build your own.

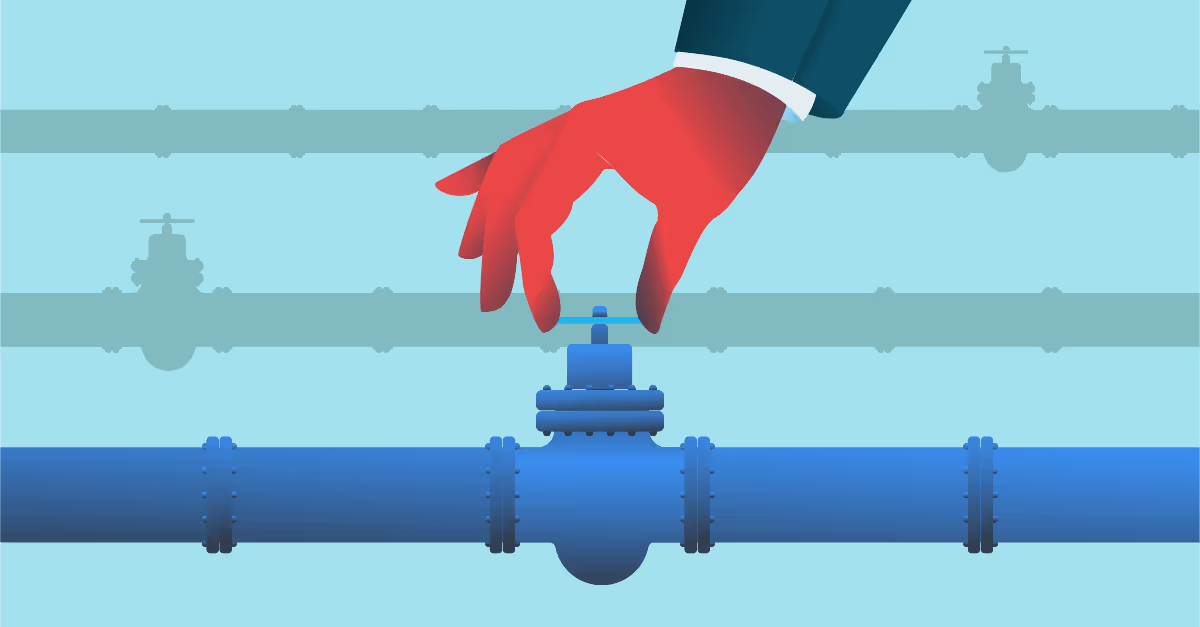

Why not build your own data pipelines?

A pipeline that is robust, reliable and scalable starts with a lot of decisions. You first have to choose which language or open-source tool you want to use.

You need to learn the tools or methods required to pull and push the data. You also need to architect the infrastructure the pipelines will run on, so that it has enough compute resources for normal operation and can scale at peak loads.

If you are collecting data through APIs, not all of them are well built or well documented, which adds further complexity. All of this can be done by a single person, but it is more typically the work of a whole team.

Although you are saving money by not paying for a tool, you are paying more for experienced people to build and manage the data pipelines.

Once the data pipeline is built and running, you also need to monitor it to make sure it is working as expected. You should always expect issues and have people monitoring your pipelines, because errors or bugs can cause data inconsistencies or even data loss.
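One common way to catch inconsistencies or data loss is a reconciliation check that compares the keys in the source with the keys that actually landed in the destination. The sketch below is a minimal example, assuming you can fetch the primary keys from both sides (for instance with a `SELECT id ...` query); the `reconcile` function and its report format are hypothetical. Someone still has to run a check like this on a schedule and act on the alerts.

```python
from collections import Counter


def reconcile(source_ids, destination_ids):
    """Compare primary keys seen in the source with those loaded into the destination."""
    source, dest = set(source_ids), set(destination_ids)
    missing = sorted(source - dest)     # extracted but never loaded: possible data loss
    unexpected = sorted(dest - source)  # loaded but absent from the source: stale or bad rows
    # Keys loaded more than once point to a bug such as a retried, non-idempotent load.
    duplicates = sorted(k for k, n in Counter(destination_ids).items() if n > 1)
    return {
        "source_count": len(source_ids),
        "destination_count": len(destination_ids),
        "missing": missing,
        "unexpected": unexpected,
        "duplicates": duplicates,
        "ok": not (missing or unexpected or duplicates),
    }


# Hypothetical key lists: id 3 never arrived and id 2 was loaded twice.
report = reconcile(source_ids=[1, 2, 3, 4], destination_ids=[1, 2, 2, 4])
if not report["ok"]:
    print("ALERT:", report)
```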

Data issues that reach your warehouse will cause problems downstream in your data analytics and data science workstreams, and they need to be fixed as soon as possible so that the business can keep making data-led decisions.

With your own data pipelines, it is up to your own team to diagnose and fix the issue. This also comes at a cost to the business, as you will need people constantly monitoring your data pipelines.

What do we recommend?

Using a third-party managed tool will save you lots of time in the long run, and potentially even some money. These tools typically come with many connectors out of the box, allowing you to connect to hundreds of source systems and move the data into various destination warehouses.

They are also monitored by the provider, so if an API endpoint is added or changed, you don't have to worry about it. You can also get your data in quickly, with low latency, and the tools are built to scale with demand, loading your new or updated data on a daily basis or even in real time.
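Loading only new or updated data usually relies on something like a high-water mark: remember the latest change timestamp you have loaded, and ask the source only for rows changed after it. The sketch below assumes a hypothetical `customers` table with an `updated_at` column holding ISO-8601 timestamps, and uses two in-memory SQLite databases as stand-ins for the source and the warehouse. It only illustrates the idea; it is not how any particular managed tool works internally.

```python
import sqlite3


def incremental_load(source, dest, last_watermark):
    """Copy only rows changed since last_watermark and return the new watermark."""
    rows = source.execute(
        "SELECT id, email, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # INSERT OR REPLACE overwrites any existing row with the same primary key.
    dest.executemany(
        "INSERT OR REPLACE INTO customers (id, email, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    dest.commit()
    # Rows are ordered by updated_at, so the last one carries the highest timestamp.
    return rows[-1][2] if rows else last_watermark


schema = "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, updated_at TEXT)"
source, dest = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
source.execute(schema)
dest.execute(schema)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "alice@example.com", "2024-01-01T09:00:00"),  # illustrative sample data
        (2, "bob@example.com", "2024-01-02T09:00:00"),
    ],
)
watermark = incremental_load(source, dest, "1970-01-01T00:00:00")  # first run: full load
source.execute(
    "UPDATE customers SET email = 'alice@new.example', "
    "updated_at = '2024-01-03T09:00:00' WHERE id = 1"
)
watermark = incremental_load(source, dest, watermark)  # second run: only id 1 is copied
```

The strict `>` comparison would miss a row committed late with the same timestamp as the current watermark, and deletes in the source are never picked up. Edge cases like these are exactly what you take on when you build pipelines yourself.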

By using a third-party tool, you also free up your data engineers for more valuable work. Do you want your whole data team to be focussing on just getting your data into your warehouse consistently and on time?

No, you want them to be adding value to the data: modelling it for analysis, running models or helping to build out your core product.

Building your own data pipelines has some benefits, but unless you have a large data team and are willing to dedicate that much time and resource to building and maintaining your own pipelines, go for a managed solution.

Tools to build automated data pipelines

There are many tools available, but two we love to use are Rivery and Fivetran. Fivetran has over 200 fully managed connectors that you can set up in minutes, and it can handle schema migrations automatically, so that you always have the latest data model.
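To show what an automatic schema migration means in practice, here is a minimal sketch of handling schema drift: when a source row arrives with a field the destination table doesn't have yet, the column is added before the row is loaded. This is a simplified illustration using SQLite, with a hypothetical `customers` table and hypothetical `migrate_schema` and `load_row` helpers; it is not Fivetran's or Rivery's implementation. Table and column names are interpolated directly into the SQL, so this only suits trusted names.

```python
import sqlite3


def existing_columns(conn, table):
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}


def migrate_schema(conn, table, incoming_row):
    """Add any columns present in the incoming row but missing from the table."""
    current = existing_columns(conn, table)
    added = [col for col in incoming_row if col not in current]
    for col in added:
        # New columns are nullable, so rows loaded before the change simply hold NULL.
        conn.execute(f'ALTER TABLE {table} ADD COLUMN "{col}"')
    return added


def load_row(conn, table, row):
    # Migrate first, then insert the row using its own column names.
    migrate_schema(conn, table, row)
    columns = ", ".join(f'"{c}"' for c in row)
    placeholders = ", ".join("?" for _ in row)
    conn.execute(
        f"INSERT INTO {table} ({columns}) VALUES ({placeholders})", list(row.values())
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
load_row(conn, "customers", {"id": 1, "email": "alice@example.com"})
# The source starts sending a new field; the destination table gains a column.
load_row(conn, "customers", {"id": 2, "email": "bob@example.com", "country": "UK"})
```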

If a connector isn't available, you can create your own using APIs. These custom connectors still include some of the managed benefits, such as schema migrations when you modify your code, monitoring in the Fivetran platform and more.

Fivetran even integrates well with dbt to run your transformation models. All of this reduces time to insights and increases the ROI on your data.

To find out more about how you can automate your data pipelines, get in touch with the team.
