Tableau Prep recently made it possible to write back to databases. At this point it only supports the following:

-

SQL Server

-

Oracle

-

PostgreSQL

-

MySQL

-

Teradata

-

Snowflake

-

Amazon Redshift

Want to write back to Google BigQuery but can't find it in the list above? Look no further, because here at Biztory, we've come up with a solution that bypasses the missing native functionality in Tableau Prep.

As you might know, Tableau Prep is comprised as two products: Tableau Prep Builder and Tableau Prep Conductor. Let's dive into these first.

Tableau Prep Builder

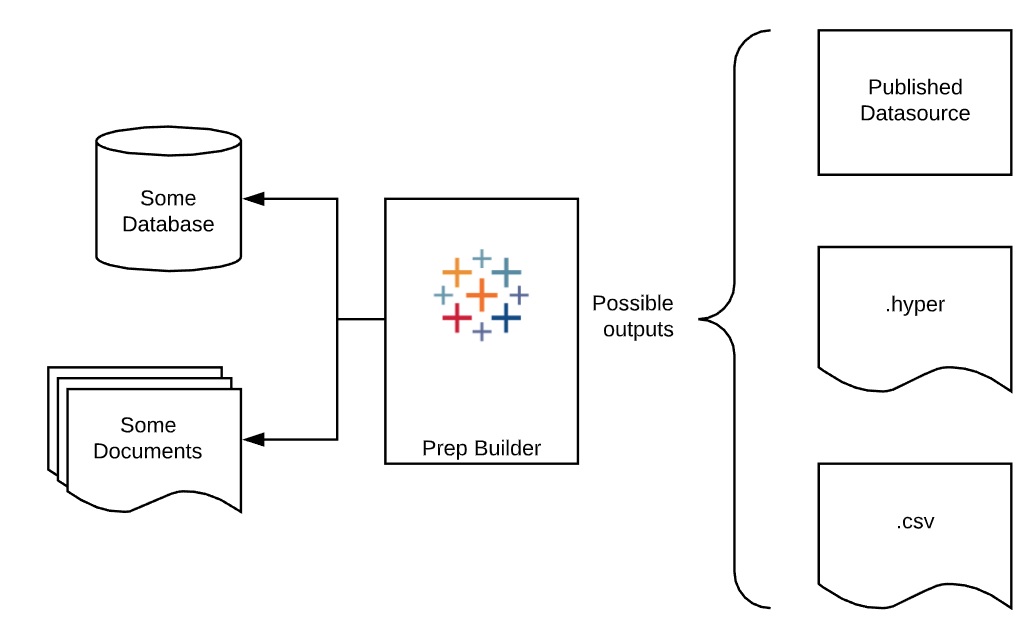

Tableau Prep Builder is an application that allows people to combine data from all kinds of data sources, join and prep them, and eventually write them to an output file, either locally or as a published datasource on Tableau Server.

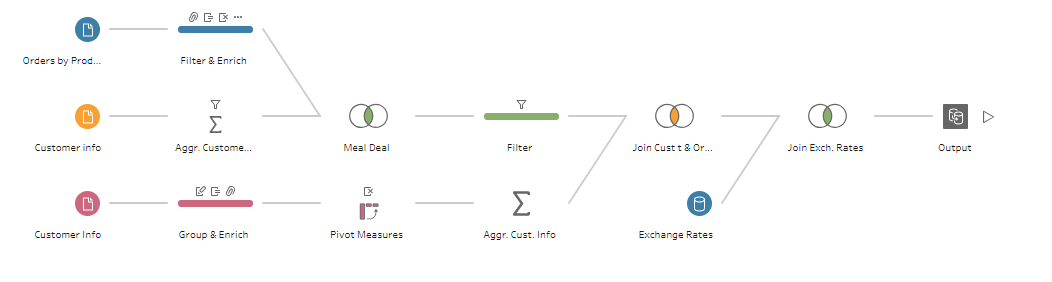

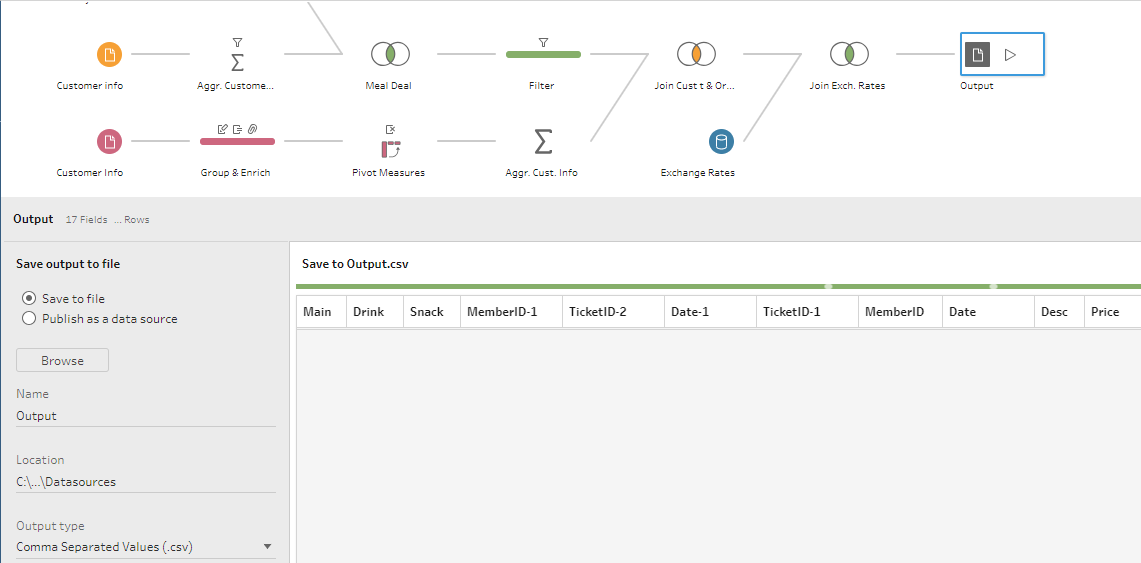

What's nice about Tableau Prep Builder, is that it lets users prep their data in an easy and interactive way. Below, we see a flow that uses different input sources (Google Sheets, a database and a static csv) that are cleaned, aggregated and joined all together to be written to an output. This output node can either publish the datasource to Tableau Server, or store it locally as a hyper or csv file.

Tableau Prep Conductor

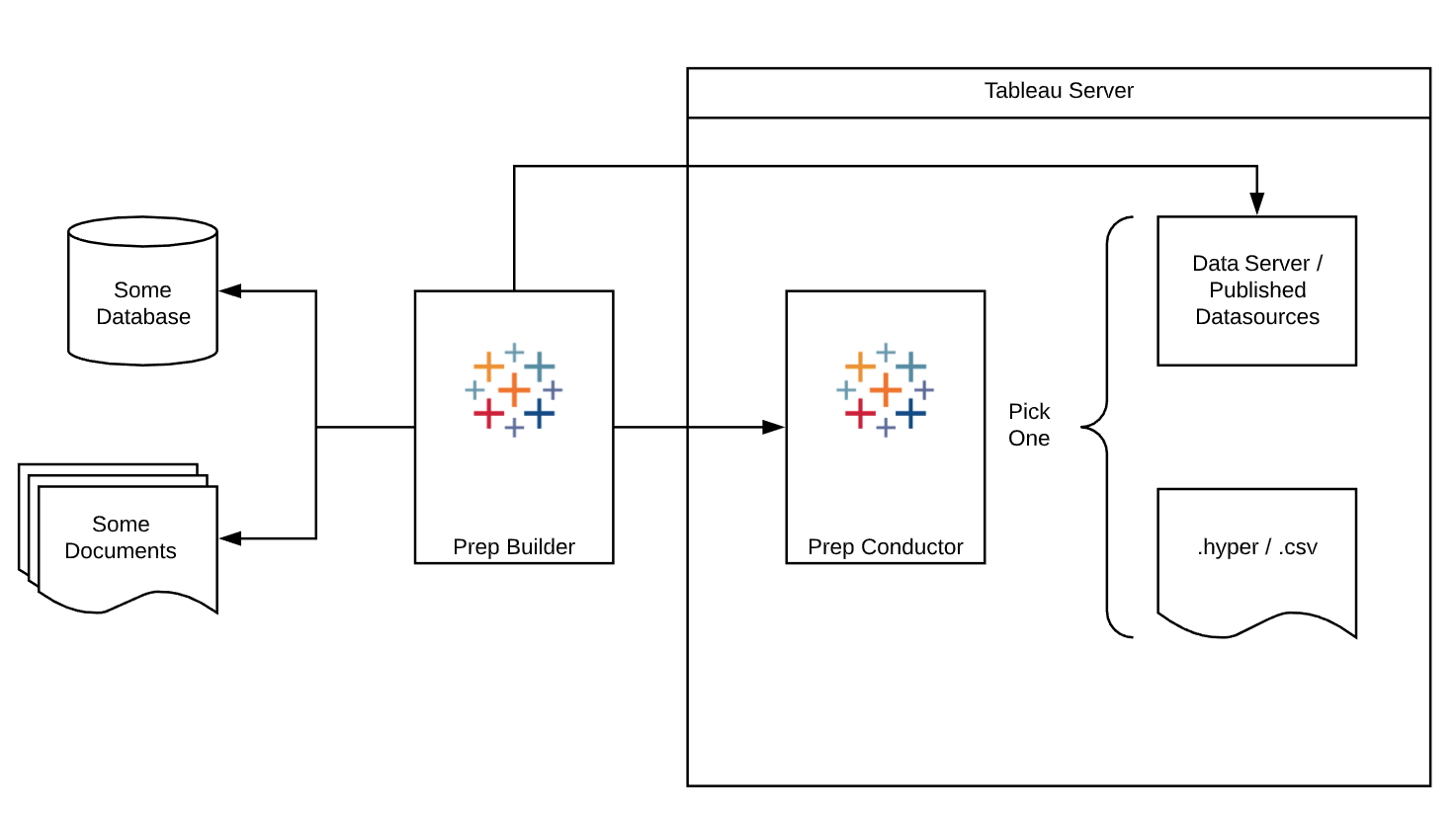

Tableau Prep Conductor allows users to publish their flows to Tableau Server, so these can be ran and scheduled from Tableau Server. Now, with Tableau Prep Conductor, we similar output options as with Tableau Prep Builder. As shown below, after publishing a Prep flow from Tableau Prep Builder to Tableau Server, Tableau Prep Conductor can now in turn store the output data as a published datasource on Tableau Server or Prep Conductor can store it as a hyper or csv file locally.

Now, all these output options are nice, but what if we want to store our data into a database or data warehouse to start using the cleaned data at scale? Nor Tableau Prep Builder or Tableau Prep Conductor supports writing back to Google BigQuery natively yet. However, at Biztory, we're always thinking outside the box to build solutions that seem impossible initially. This is where the exciting part begins!

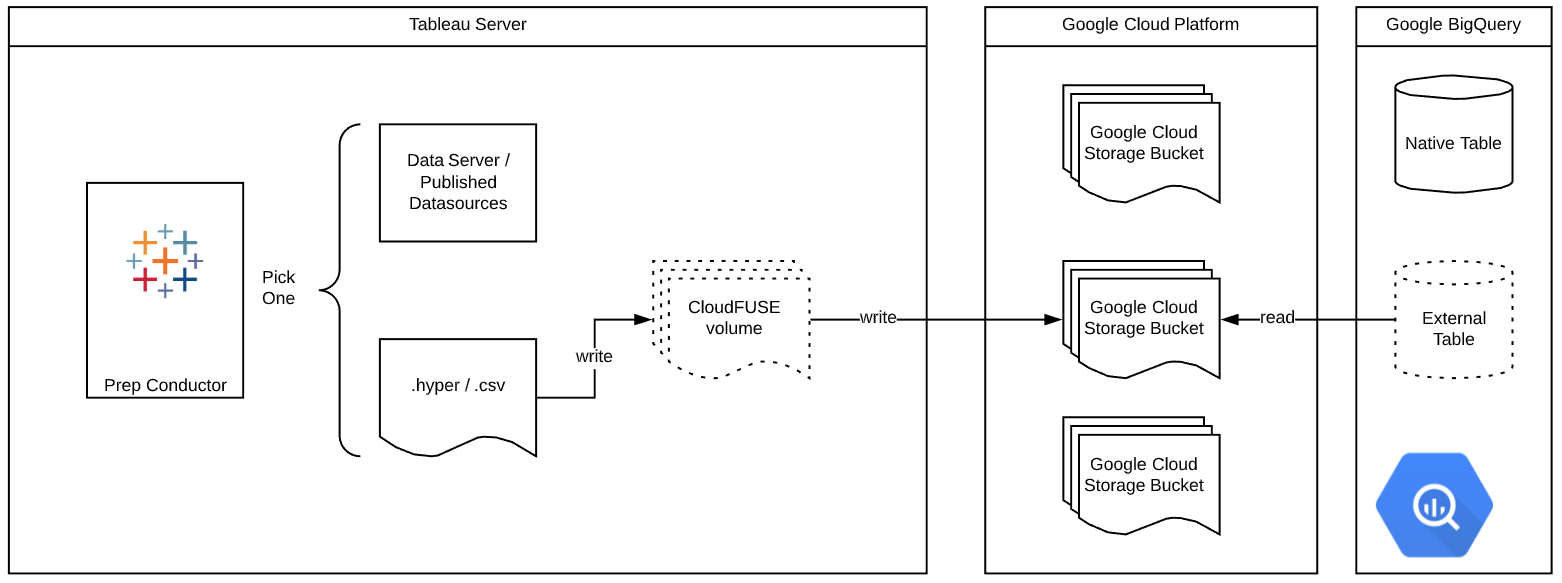

At Biztory, we're working with Google Cloud Platform, which integrates very well with Google BigQuery. This got us thinking: How can we get our prepped date into our Google BigQuery data warehouse so we can share and analyse this at scale? As we've explained above, we have three options to work around the limitations: publish as a Tableau datasource, save locally as hyper or save locally as csv. The former two are currently incompatible with BigQuery. The latter, i.e. output as csv, might, since we came across the option to load CSV data from Google Cloud Storage after some online research.

Two out of three boxes are now ticked: writing to a local csv file on the server where our Tableau Server instance is installed and inserting data from a Cloud Storage csv file into BigQuery. The missing link? Getting the csv file from a local folder on our server to Cloud Storage. We will use the open source fuse adapter Cloud Storage FUSE that allows us to mount the Cloud Storage bucket(s), used to insert data into BigQuery, as file systems on Linux or MacOS. Notice that there is no supported solution for Windows yet, but one option would be to mount the drive with FUSE on Linux and to share it through NFS. That way, the bucket could be mounted on a Windows VM as well.

Our solution is shown in the overview below: Tableau Prep Conductor writes to a mounted Google Cloud Storage Bucket through FUSE, which in turn is picked up by BigQuery and inserted in a data warehouse table.

Now, the question that remains, is how Tableau Prep Builder users can write to a mounted folder on a server that is not available on their computer. Also to tackle this problem, we've got you covered. This only requires some manual adjustments in the JSON flow file underneath the entire .tfl or .tflx file. The following steps should get you all set:

1. Create your flow in Tableau Prep Builder. Make sure all the input connections are accessible by Tableau Server too and that Save to file is ticked in the output step, as well as Comma Separated Values (.csv) under Output type

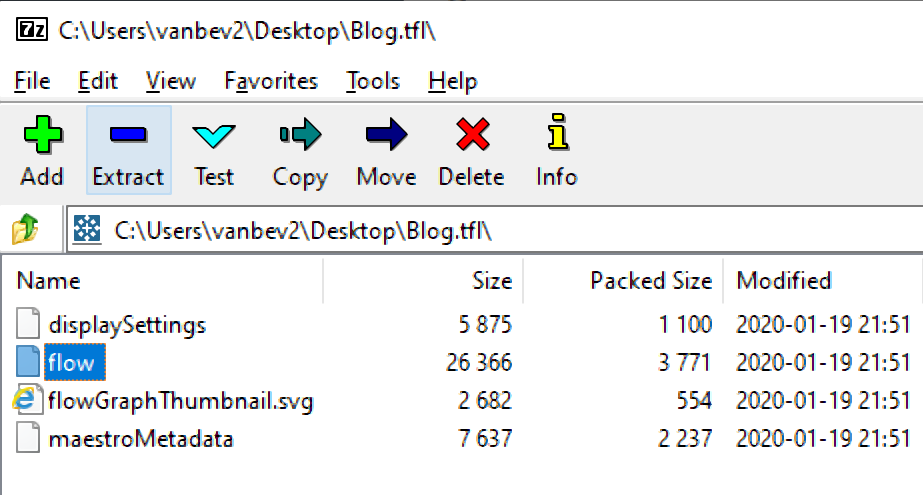

2. Save the flow as a .tfl or .tflx and close Tableau Prep Builder

3. Open the .tfl or .tflx archive by unzipping it (e.g. with 7zip or WinZip) and edit the flow JSON file

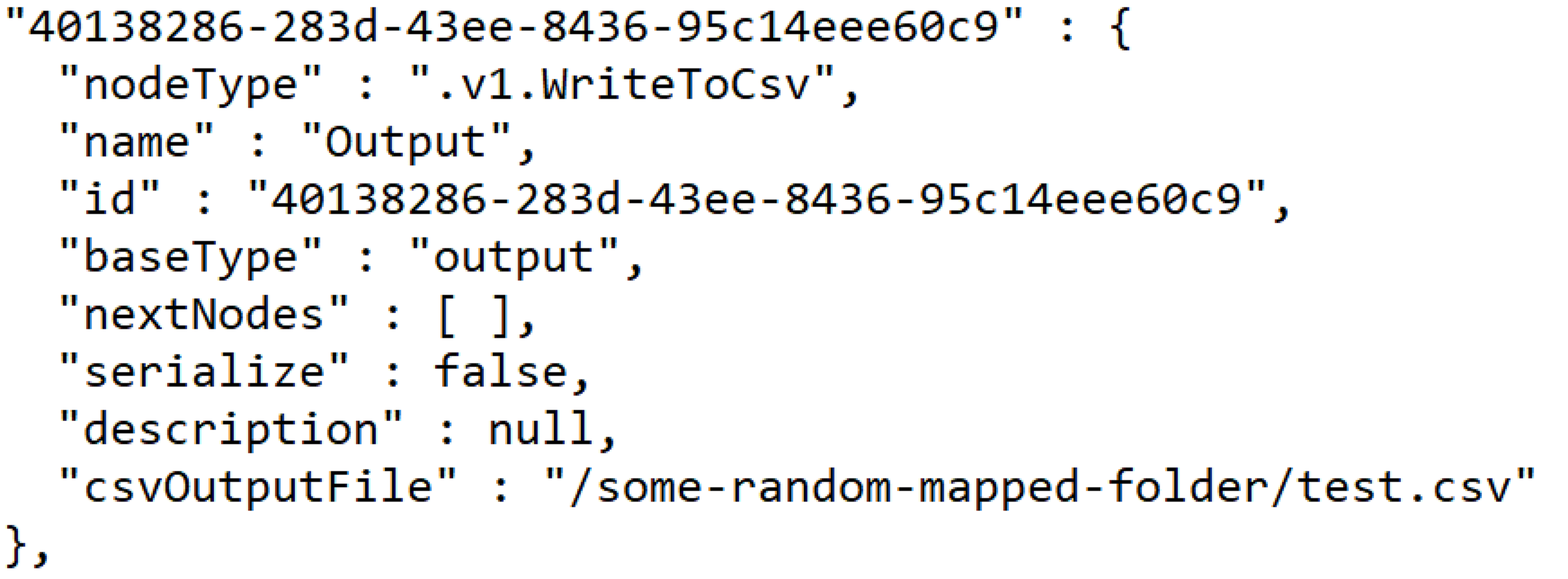

4. In the JSON file, search for NodeType WriteToCsv, containing the csvOutputFile with the underlying path to the csv file. In the parameter csvOutputFile, change the output file linking to the local folder on your computer to the folder mapped to the Google Cloud Storage Bucket (in this case /some-random-mapped-folder/test.csv). Save the flow JSON file in the .tfl or .tflx archive

5. Re-open the flow (.tfl or .tflx file) in Tableau Prep Builder and check if the output folder now links to the mapped drive

6. Publish the flow to Tableau Server

Boom! That’s all. With this trick, you can use prep to combine all your sources of data and store it safely back in a BigQuery database table.

- Viktor

Empower your organization with intuitive analytics

Tableau is designed to put the user first because data analysis should be about asking questions and not about learning software. With built-in visual best practices, Tableau enables limitless visual data exploration without interrupting the flow of analysis.

As the market-leading choice for modern business intelligence, the Tableau platform is known for taking any kind of data from almost any system and turning it into actionable insights with speed and ease. It’s as simple as dragging and dropping.

We are a full-stack provider and integrator, relying on extensive experience and best practices to find your unique optimal set-up allowing you to tell the data stories you are eager to tell.